Emerging Trends in Applied Deep Learning Research

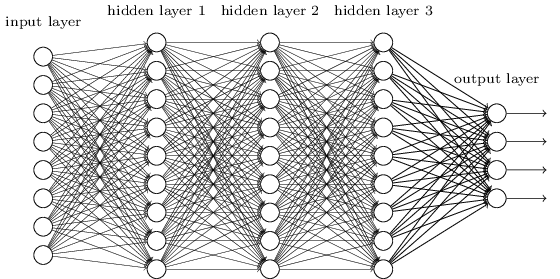

Sample neural network

Introduction

For centuries, scientists have dreamed of giving machines human-like intelligence. In 1950, not long after the invention of the electronic computer, British scientist Alan Turing published a paper entitled “Computing Machinery and Intelligence”, which posed a thought-provoking question: can a machine think like a human? To answer it, generations of scientists have devised different approaches, such as expert systems that aim to model human expertise in specific fields.

Applications such as medical diagnosis can be handled by encoding human knowledge as facts and rules, which makes them a good fit for expert systems. However, a great many problems cannot be settled by simple facts and rules. For example, distinguishing cats from dogs baffled scientists for decades, until the early 21st century. A human might determine an animal’s species from its appearance, such as the shape of its mouth or the color of its hair. If a computer tried to identify objects the way humans do, it would run into many new problems: how should it identify the shape of the animal’s mouth or eyes? What if two animals have similar hair color?

Then a novel, though not entirely unorthodox, idea arose: instead of telling the machine how to do it, we can let the machine learn the rules by itself. Methods of this kind later came to be called machine learning. Although the idea might sound fanciful, it originated in statistical learning theory, which has been studied for over a century, long before the computer was invented. Statistical learning relies heavily on statistical modeling and mathematical algorithms; a model can be viewed as a composition of mathematical functions or, in a statistician’s view, a mixture of probability distributions. A statistical learning model tries to fit the given data as closely as it can, much as our middle school math teachers asked us to fit a linear function to given data points. When the dataset is sufficiently large and the model fits it well, we generally believe that the model captures the pattern of that dataset. Such algorithms (e.g. support vector machines, k-nearest neighbors, gradient boosting) work very well on certain real-world problems and are still widely used in industry today. However, because these methods are built on explicit mathematical formulations, some argue that they lack genuine “intelligence”. Engineers must also handcraft the features in the dataset, which is tedious, labor-intensive work. Moreover, problems such as speech recognition and computer vision are hard to model with statistical algorithms because these algorithms suffer from the curse of dimensionality: they perform poorly when the input dimension is large.
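To make the idea of fitting a linear function to data points concrete, here is a minimal sketch of ordinary least squares in plain Python. The function name `fit_line` and the sample data are hypothetical, chosen only for illustration; the sketch is not tied to any particular library or to the methods discussed later in the report.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Unnormalized covariance of x and y, and unnormalized variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data points lying roughly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

slope, intercept = fit_line(xs, ys)
print(f"y = {slope:.2f} * x + {intercept:.2f}")
```

The model here has only two parameters, and the "learning" step is a closed-form calculation. The same principle of choosing parameters that best fit the observed data underlies the larger statistical models mentioned above.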

Meanwhile, some scientists tried to devise models that simulate the thought processes of the human brain. One such model, the perceptron, designed in 1957 to mimic the neural networks of the human brain, was the embryonic form of the modern deep learning model. After decades of effort, LeCun et al. [1] provided the first practical demonstration of a neural network trained with backpropagation to read handwritten digits, suggesting that deep neural networks could be deployed on real-life problems. The new generation of deep learning began in 2006, when Hinton et al. [2] put forward the deep belief network, which made it possible to overcome previous limitations and train deeper networks. Empowered by the explosion of data in the internet era, deep learning has since shown its superior capability. In 2012, a deep learning model, AlexNet [3], achieved a top-5 error of 15.3%, more than 10.8 percentage points lower than the runner-up, in the ImageNet Large Scale Visual Recognition Challenge, an image classification competition that requires competitors to classify images from a dataset of over a million images into 1,000 classes.

To date, deep learning has been applied to almost every corner of our daily lives. Probably its most prominent application is computer vision (CV), which enables computers to perceive visual information as human beings do. Several autonomous-driving companies (such as Uber, Tesla, and Waymo) use deep learning to detect pedestrians, cyclists, and vehicles around their autonomous cars. Computer vision also helps healthcare professionals save patients’ lives by reducing inaccurate diagnoses. Apart from computer vision, deep reinforcement learning is another thriving approach that has achieved tremendous success. The famous AlphaGo [4] defeated two of the best Go players in human history. Empowered by deep reinforcement learning, the Go agent can make moves that human players would never consider. Natural language processing (NLP) is another well-investigated field for deep learning. Enhanced by recurrent neural networks, Google Translate now approaches expert-level quality in translating human languages. The state-of-the-art NLP model, BERT [5], is reported to outperform humans on various reading comprehension tasks.

In this report, I describe recent trends in applications of deep learning. Specifically, I discuss three well-studied yet promising areas: transfer learning, reinforcement learning, and recommender systems. I first discuss recent innovations and challenges in these areas. I then project future trends and describe how these technologies can empower society and human beings. Although this report covers state-of-the-art (SOTA) applications and technologies in deep learning, I have tried to write it so that readers with only a minor background in artificial intelligence can gain a basic understanding of these still-developing areas.

Acknowledgment

This is the final report for ENG 3091, Fall 2019, Wenzhou-Kean University. I thank Dr. Wendy Austin for proofreading the report.

[1] LeCun, Y.; Boser, B.; Denker, J. S.; Henderson, D.; Howard, R. E.; Hubbard, W.; and Jackel, L. D. 1989. Backpropagation applied to handwritten zip code recognition. Neural Computation 1(4):541–551.

[2] Hinton, G. E.; Osindero, S.; and Teh, Y.-W. 2006. A fast learning algorithm for deep belief nets. Neural Computation 18(7):1527–1554.

[3] Krizhevsky, A.; Sutskever, I.; and Hinton, G. E. 2012. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, 1097–1105.

[4] Silver, D.; Huang, A.; Maddison, C. J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. 2016. Mastering the game of Go with deep neural networks and tree search. Nature 529(7587):484–489.

[5] Devlin, J.; Chang, M.-W.; Lee, K.; and Toutanova, K. 2018. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.